Simple image classification using TensorFlow and CIFAR-10

Almost one year after following cs231n online and doing the assignments, I met the CIFAR-10 dataset again.

This time, instead of implementing my Convolutional Neural Network from scratch with NumPy, I had to build it in TensorFlow, as part of one of the Deep Learning Nanodegree assignments.

As an aside, since this course uses some content from the free Deep Learning course, they took the time to fix the subpar presentation of that course. Good. :-)

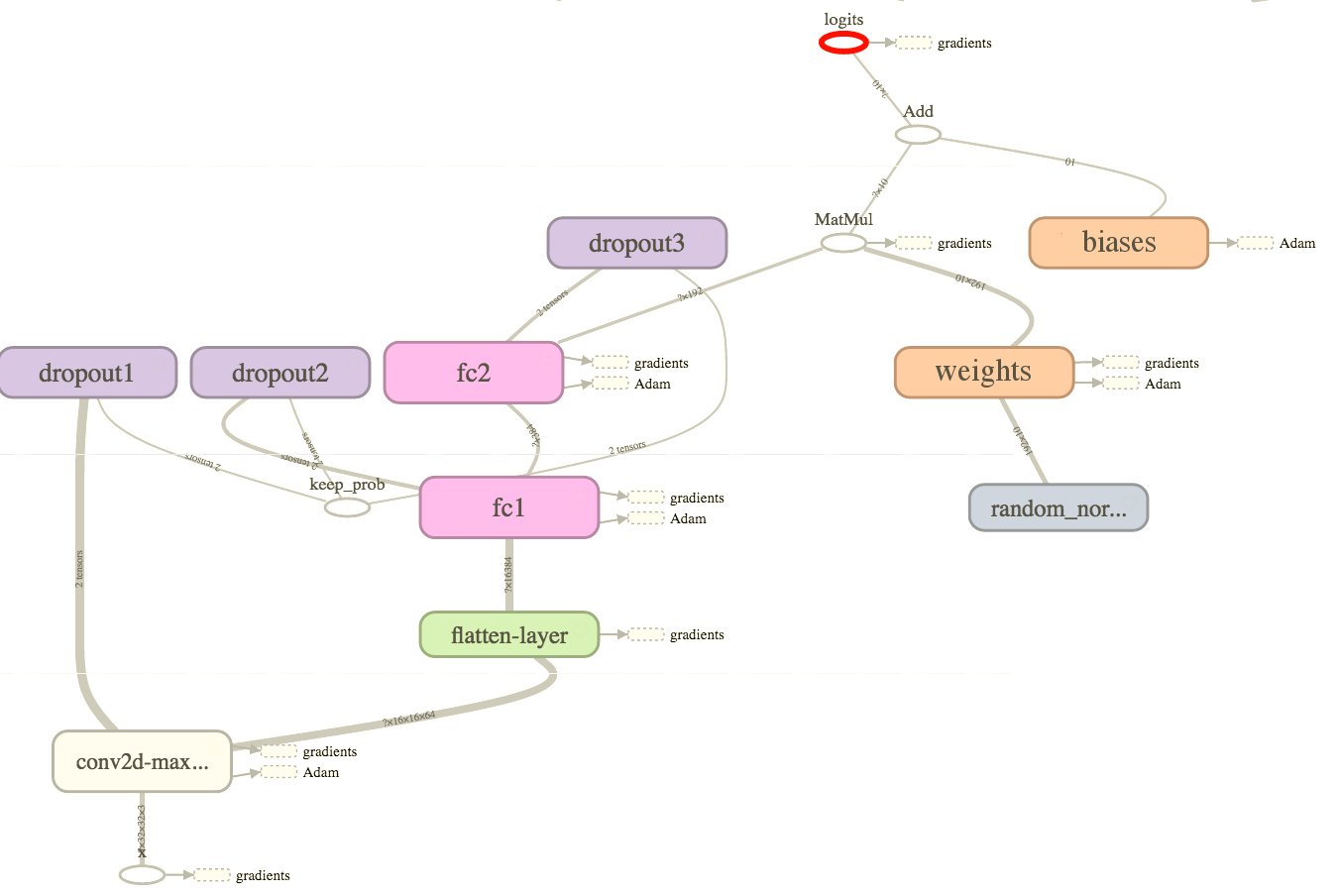

The ConvNet

I ended up with quite a simple ConvNet. To meet the specifications, we were expected to reach at least 50% accuracy on the training, validation and test sets. So I went with a network of four layers (or five, if you count pooling as a separate layer):

- `conv2d-maxpool` with a ReLU activation function:
  - A convolution layer with 64 kernels of size 3x3, followed by
  - A max pooling layer of size 3x3 with 2x2 strides
- A fully connected layer mapping the output of the previous layer to 384 outputs, with a ReLU activation function
- Another fully connected layer mapping the 384 outputs of the previous layer to 192 outputs, with a ReLU activation function
- A final layer mapping the 192 outputs to the 10 classes in CIFAR-10, to which SoftMax is applied.

On top of that, all layers were regularized with Dropout.
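To get a feel for the size of such a network, here is a rough sketch in plain Python that walks through the layer shapes and parameter counts. It is not the assignment code. It assumes a convolution stride of 1 and 'SAME' padding for both the convolution and the pooling layer, neither of which is stated above, and the helper names (`same_padding_size`, `build_summary`) are made up for illustration. Dropout is left out because it adds no parameters.

```python
import math


def same_padding_size(size, stride):
    """Spatial output size under 'SAME' padding (assumed): ceil(size / stride)."""
    return math.ceil(size / stride)


def build_summary(height=32, width=32, channels=3):
    """Return (name, output shape, parameter count) for each layer.

    Defaults match CIFAR-10 images, which are 32x32 RGB.
    """
    layers = []

    # Convolution: 64 kernels of size 3x3. Stride 1 is an assumption.
    conv_h = same_padding_size(height, 1)
    conv_w = same_padding_size(width, 1)
    conv_params = 3 * 3 * channels * 64 + 64  # weights + biases
    layers.append(("conv2d 3x3, 64 kernels", (conv_h, conv_w, 64), conv_params))

    # Max pooling: 3x3 window, 2x2 strides, no trainable parameters.
    pool_h = same_padding_size(conv_h, 2)
    pool_w = same_padding_size(conv_w, 2)
    layers.append(("maxpool 3x3, stride 2", (pool_h, pool_w, 64), 0))

    # Flatten, then the three dense layers: 384 -> 192 -> 10.
    n_in = pool_h * pool_w * 64
    for name, n_out in (("fully connected", 384),
                        ("fully connected", 192),
                        ("output (softmax)", 10)):
        layers.append((name, (n_out,), n_in * n_out + n_out))
        n_in = n_out

    return layers


summary = build_summary()
for name, shape, params in summary:
    print(f"{name:<26} {str(shape):<14} {params:>10,}")

total_params = sum(params for _, _, params in summary)
print(f"{'total':<26} {'':<14} {total_params:>10,}")
```

Under these assumptions, almost all of the parameters sit in the first fully connected layer, since it is fed the flattened output of the pooling layer.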

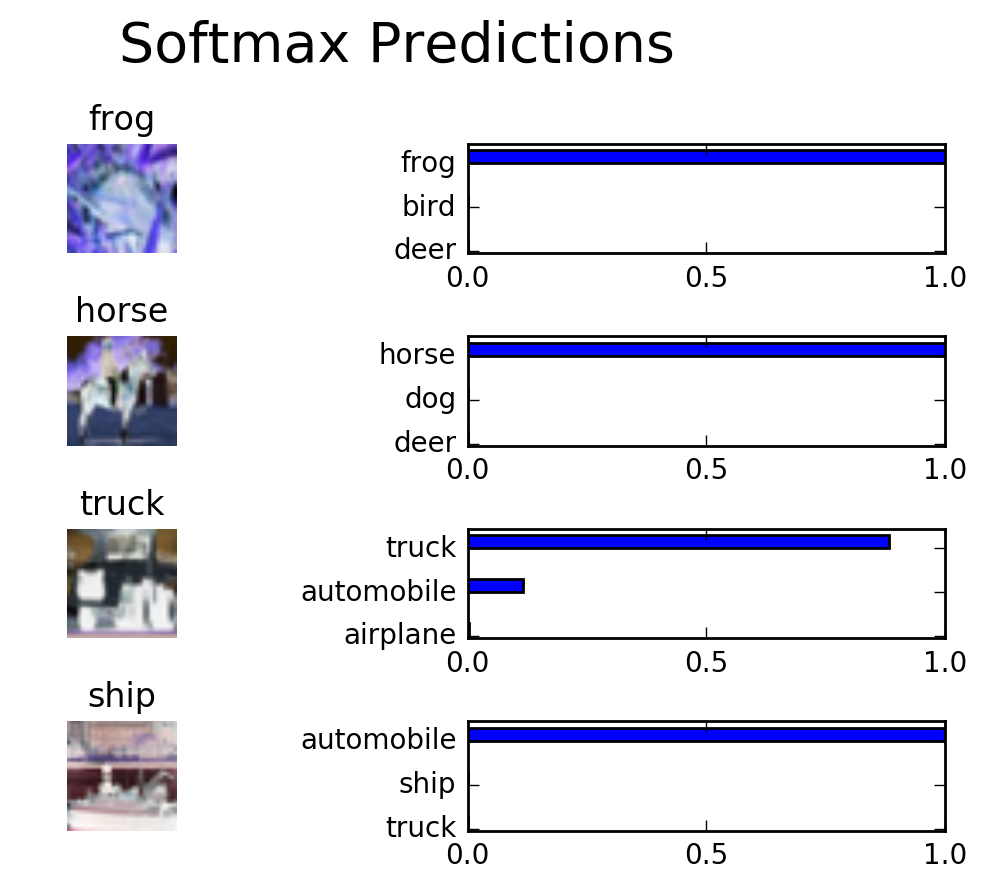

Classification Performance

This neural network, trained over 50 epochs, achieved ~66% validation accuracy and ~65% test accuracy. Pretty good for a small project.
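For reference, accuracy here simply means the fraction of images whose highest-probability class matches the true label. The sketch below shows that calculation in plain Python. The logits and labels are invented for illustration and are not results from the network, and the `softmax` and `accuracy` helpers are hypothetical names, not TensorFlow functions.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    largest = max(logits)
    # Subtracting the maximum keeps exp() numerically stable.
    exps = [math.exp(x - largest) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def accuracy(batch_logits, labels):
    """Fraction of examples whose most probable class equals the label."""
    correct = 0
    for logits, label in zip(batch_logits, labels):
        probs = softmax(logits)
        predicted = probs.index(max(probs))
        if predicted == label:
            correct += 1
    return correct / len(labels)


# Made-up logits for three images over the 10 CIFAR-10 classes.
example_logits = [
    [0.1, 2.0, 0.3, 0.0, -1.0, 0.5, 0.2, 0.0, -0.3, 0.1],  # highest score: class 1
    [0.0, 0.2, 0.1, 1.5, 1.2, 0.0, -0.5, 0.3, 0.1, 0.0],   # highest score: class 3
    [-0.2, 0.0, 0.4, 0.1, 0.0, 0.3, 0.2, 2.2, 0.1, 0.0],   # highest score: class 7
]
example_labels = [1, 4, 7]  # the second image is misclassified

example_accuracy = accuracy(example_logits, example_labels)
print(f"accuracy: {example_accuracy:.2%}")
```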

Source code

Source code can be found on GitHub.